Applications

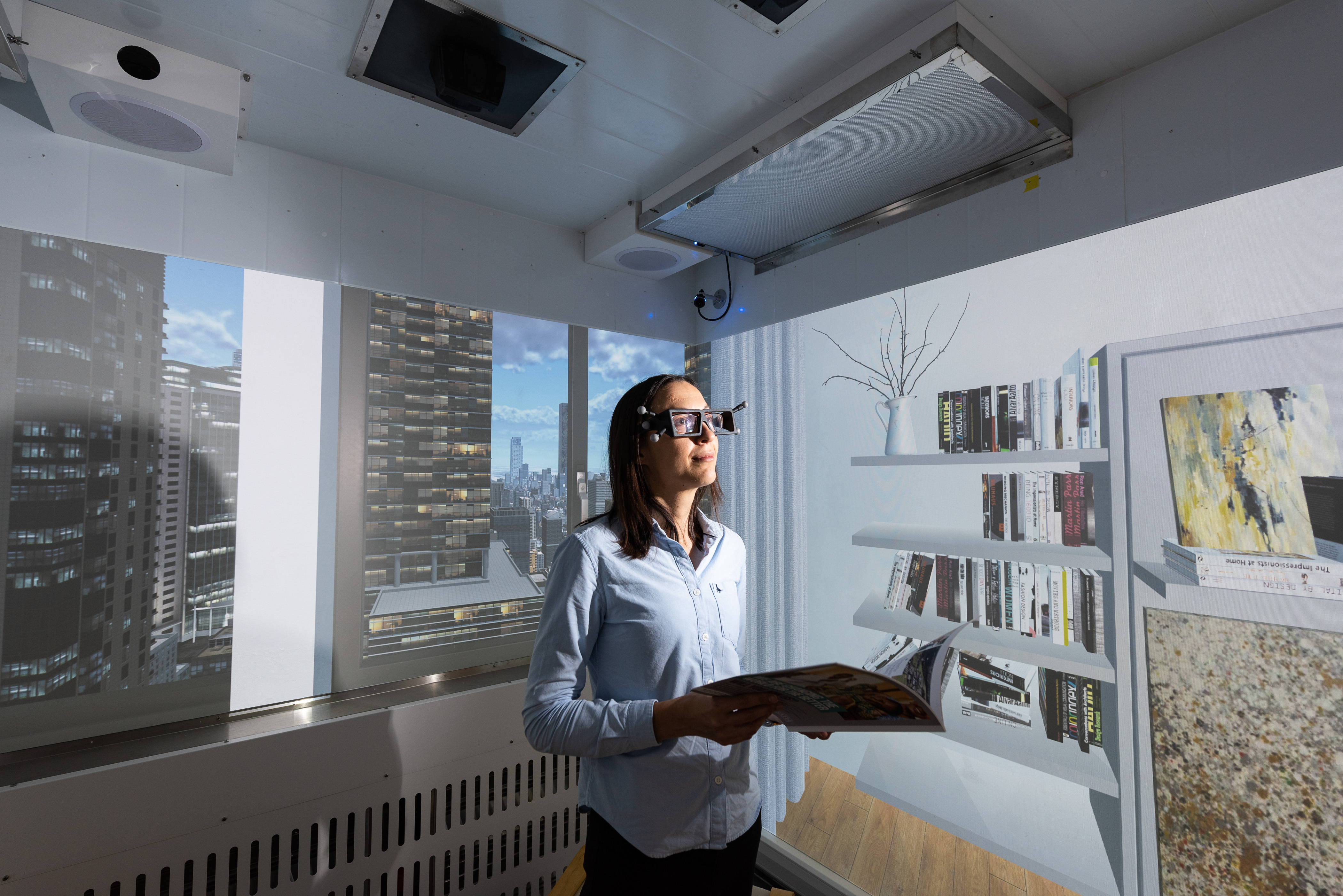

The VSimulators facility at Bath University is designed primarily as a research tool for conducting experiments with real humans in the loop. It is already being used to immerse people in a range of lifelike environments in order to study their reactions to different structures, including swaying skyscrapers and bridges. Research teams have already identified over 50 potential applications for the facility, including immersive VR game development, physical rehabilitation and driverless vehicle design, bringing together a range of industry and academic sectors. The space can be realistically configured as an office, apartment, hotel room or hospital ward, allowing researchers to create convincing ‘mixed reality’ simulations.

Installation of the VR chamber

The virtual reality projected onto the walls of the 3 x 4 metre chamber is combined with motion-tracking glasses and programmed to adjust the visual and audio output according to the time of day and the building height. The front projection is delivered over three active display “faces” that combine to form a continuous image inside the specialist chamber, which is mounted on the motion system.

The visual requirements called for an immersive virtual environment that could be projected onto the three internal screen faces (walls) of the motion-enabled chamber. In its most basic form, the concept was to offer computer-generated, real-time interactive visuals of a realistic-looking cityscape, combined with partial interior views of a room that virtually extended the dimensions of the chamber itself.
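
In CAVE-style systems, the usual way to make several projected faces read as one continuous image for a tracked viewer is to render each face with its own asymmetric (off-axis) view frustum derived from the head position. The source does not say how this was done in the chamber; the sketch below is a minimal, hypothetical illustration of the generalized perspective projection technique in plain Python, not MiddleVR's implementation. The wall dimensions and eye positions used with it are assumed values.

```python
import math

Vec = tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec) -> Vec:
    length = math.sqrt(dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)


def off_axis_frustum(pa: Vec, pb: Vec, pc: Vec, eye: Vec, near: float):
    """Return (left, right, bottom, top) near-plane extents for one screen face.

    pa, pb, pc are the face's lower-left, lower-right and upper-left corners;
    eye is the tracked head position, all in the same room coordinates.
    """
    vr = normalize(sub(pb, pa))        # screen right axis
    vu = normalize(sub(pc, pa))        # screen up axis
    vn = normalize(cross(vr, vu))      # screen normal, pointing at the viewer
    va, vb, vc = sub(pa, eye), sub(pb, eye), sub(pc, eye)
    d = -dot(va, vn)                   # eye-to-screen-plane distance
    scale = near / d                   # project screen edges onto the near plane
    return (dot(vr, va) * scale, dot(vr, vb) * scale,
            dot(vu, va) * scale, dot(vu, vc) * scale)
```

For a hypothetical front wall 4 m wide and 2.5 m high in the plane z = 0, with the eye centred 1.5 m away, `left` and `right` come out equal and opposite; moving the eye 1 m to the right makes them -0.2 and about 0.067, so the image on that wall shifts to stay aligned with its neighbours. The four values are the parameters of a standard `glFrustum`-style projection; the view must also be rotated into the face's (vr, vu, vn) basis and translated by the eye position.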

First, ST Engineering Antycip (formerly known as Antycip Simulation) had to have a 3D-modelled cityscape environment created that could be rendered at real-time frame rates and high resolution.

The environment also had to conform to a set of criteria outlined by the University, which defined the richness expected of the virtual world.

Working with our technology partners at Real-Media, we produced a Unity framework whose content could be switched at run time to show the city from different altitudes, using elevated synthetic viewpoints within the virtual buildings.
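
Run-time switching between altitudes can be pictured as a small set of viewpoint presets that an operator cycles through without reloading the scene. The sketch below is hypothetical: the source does not describe the Unity framework's structure, and the storey height, eye height and preset names are assumed values chosen only for illustration.

```python
from dataclasses import dataclass

# Assumed values; the source does not state those used in the scenarios.
STOREY_HEIGHT_M = 3.5
EYE_HEIGHT_M = 1.6


@dataclass(frozen=True)
class Viewpoint:
    """Hypothetical altitude preset: the floor of the virtual building the room sits on."""
    name: str
    floor: int

    @property
    def eye_altitude_m(self) -> float:
        # Virtual camera height above street level for an occupant on this floor.
        return self.floor * STOREY_HEIGHT_M + EYE_HEIGHT_M


class ViewpointSelector:
    """Switches between altitude presets at run time without reloading the scene."""

    def __init__(self, presets: list[Viewpoint]) -> None:
        if not presets:
            raise ValueError("at least one viewpoint preset is required")
        self._presets = list(presets)
        self._index = 0

    @property
    def current(self) -> Viewpoint:
        return self._presets[self._index]

    def advance(self) -> Viewpoint:
        # Wrap around so the operator can keep cycling through the presets.
        self._index = (self._index + 1) % len(self._presets)
        return self.current

    def select(self, name: str) -> Viewpoint:
        # Jump directly to a named preset.
        for i, preset in enumerate(self._presets):
            if preset.name == name:
                self._index = i
                return preset
        raise KeyError(name)
```

With the assumed constants, advancing from a "street" preset (floor 0) to a "mid-rise" preset (floor 10) moves the virtual camera to 10 × 3.5 m + 1.6 m = 36.6 m. Keeping the presets as data rather than as separate scenes is one way to make the switch immediate during an experiment.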

We also created a control interface to switch and cycle through the options available for each experiment scenario. The entire visual then had to be enabled for spatial tracking and multichannel synchronisation. To that end, Antycip's engineering team provided and configured a MiddleVR licence so that the experience could drive the hardware correctly and deliver the required realism.
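
Multichannel synchronisation means the images on the three walls must change together, frame for frame. In the installation this was configured through MiddleVR; the sketch below only illustrates the general lock-step idea with Python's `threading.Barrier` and reflects nothing of MiddleVR's API. The channel names and the rendering placeholder are hypothetical.

```python
import threading

# Hypothetical channel names: one render channel per projected wall.
CHANNELS = ("left", "front", "right")


def run_channels(frames: int) -> list[tuple[int, str]]:
    """Drive one thread per channel and present frames in lock-step.

    Returns the (frame, channel) presentation order so it can be inspected.
    """
    swap_barrier = threading.Barrier(len(CHANNELS))
    presented: list[tuple[int, str]] = []
    log_lock = threading.Lock()

    def channel(name: str) -> None:
        for frame in range(frames):
            # Rendering of this channel's view for `frame` would happen here.
            # Swap barrier: no channel presents frame N until every channel
            # has finished rendering frame N.
            swap_barrier.wait()
            with log_lock:
                presented.append((frame, name))
            # A channel can only reach the barrier for frame N + 1 after it
            # has presented frame N, so presentations stay grouped by frame.

    threads = [threading.Thread(target=channel, args=(name,)) for name in CHANNELS]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return presented
```

Whatever the rendering cost of each wall, `run_channels` never lets one channel present a frame ahead of the others, which is the property that keeps the three faces reading as a single continuous image.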
